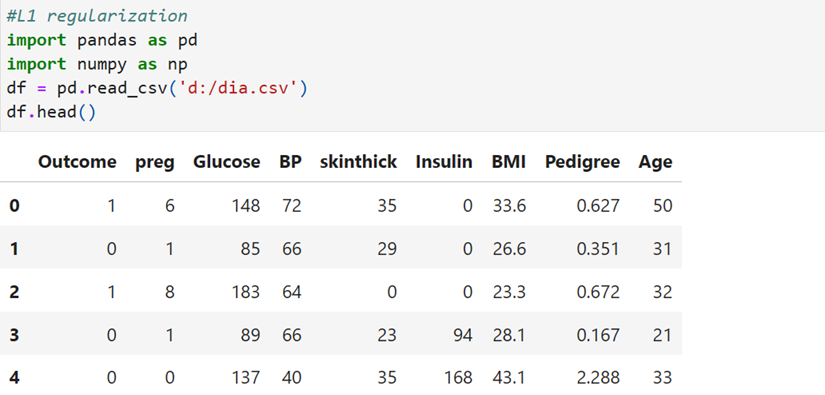

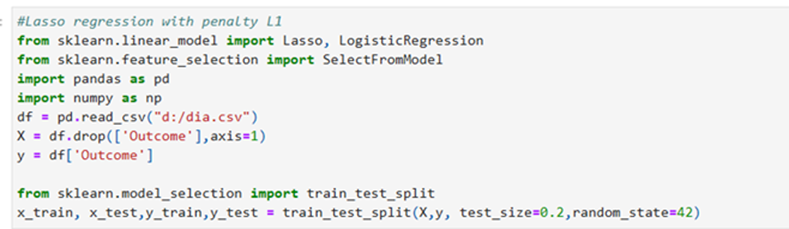

Load Libraries and Data, and Split the Data

Download the data from https://github.com/npradaschnor/Pima-Indians-Diabetes-Dataset/blob/master/diabetes.csv

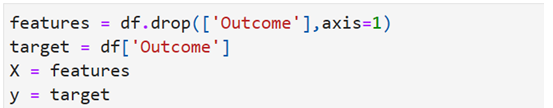

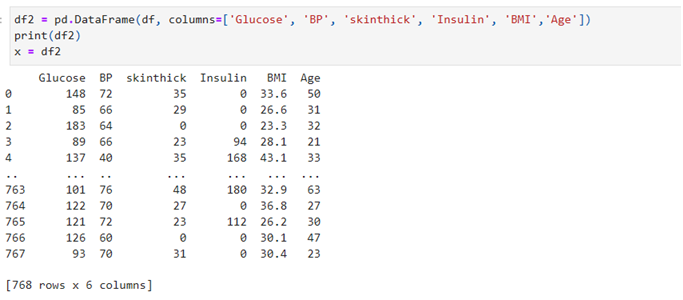
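Here is a minimal loading sketch with pandas. It assumes the CSV has already been saved locally. The GitHub link above opens a web page, and the file itself is available through the repository's "Raw" view. It also assumes the header uses the Kaggle-style column names listed in `EXPECTED_COLUMNS`, so check them against your copy. `load_pima` is a hypothetical helper name.

```python
import pandas as pd

# Assumed column names (common Kaggle layout of the Pima dataset);
# verify them against the header of your downloaded file.
EXPECTED_COLUMNS = [
    "Pregnancies", "Glucose", "BloodPressure", "SkinThickness",
    "Insulin", "BMI", "DiabetesPedigreeFunction", "Age", "Outcome",
]


def load_pima(path: str) -> pd.DataFrame:
    """Read the diabetes CSV and fail early if an expected column is missing."""
    df = pd.read_csv(path)
    missing = [c for c in EXPECTED_COLUMNS if c not in df.columns]
    if missing:
        raise ValueError(f"missing columns: {missing}")
    return df


# Usage (hypothetical local path):
# df = load_pima("diabetes.csv")
# print(df.shape)  # the standard Pima file has 768 rows and 9 columns
```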

Separate the features and the target (Outcome)

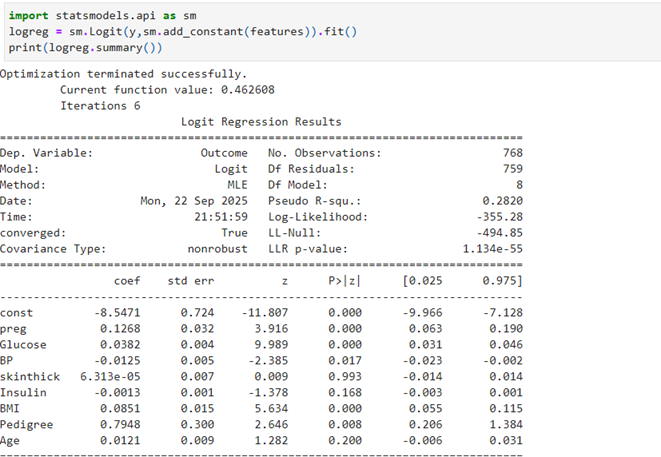
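In pandas, separating the features from the target takes a single `drop` call. This sketch assumes the target column is named `Outcome`, as in the heading. `split_features_target` is a hypothetical helper.

```python
import pandas as pd


def split_features_target(df: pd.DataFrame, target: str = "Outcome"):
    """Return X (every column except the target) and y (the target column)."""
    X = df.drop(columns=[target])  # drop returns a new frame; df is unchanged
    y = df[target]
    return X, y


# Usage, where df is the diabetes DataFrame:
# X, y = split_features_target(df)
```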

Logistic Regression using Original Data

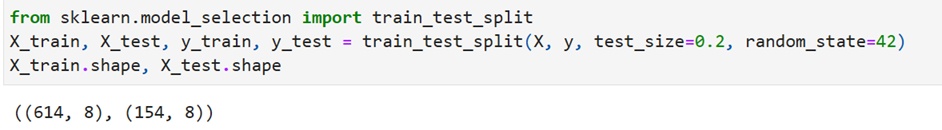

Split the data into train and test datasets
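The sketch below shows one way to do the split and a baseline logistic regression on all original features, using scikit-learn. The text does not state the split settings used in the screenshots. The 25% test size, `random_state=42`, stratification and the scaling step are therefore assumptions. `baseline_logistic` is a hypothetical helper.

```python
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler


def baseline_logistic(X, y, test_size=0.25, random_state=42):
    """Split the data, fit logistic regression on all features, return (model, test accuracy)."""
    # stratify=y keeps the Outcome class balance similar in the train and test sets
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=test_size, random_state=random_state, stratify=y
    )
    # Scaling helps the default lbfgs solver converge when feature ranges differ a lot
    model = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))
    model.fit(X_train, y_train)
    return model, accuracy_score(y_test, model.predict(X_test))


# Usage:
# model, acc = baseline_logistic(X, y)
# print(f"Test accuracy: {acc:.3f}")
```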

Import Lasso Model

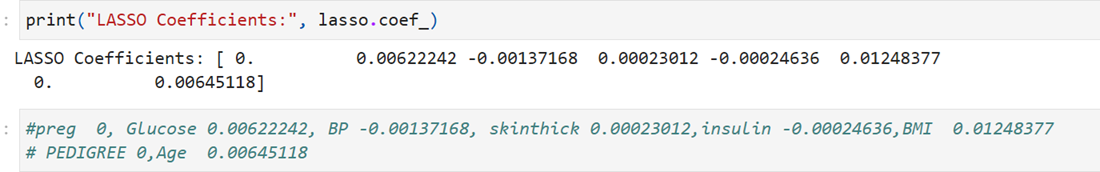

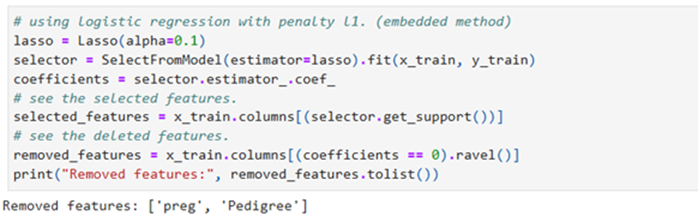
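This sketch assumes the section uses scikit-learn's `Lasso`. One way to choose its penalty strength is cross-validation with `LassoCV`. The text does not say how `alpha` was chosen, so this is only one option. `Lasso` is a least-squares linear model, so applying it to the 0/1 `Outcome` serves only to shrink coefficients and zero some of them out. `fit_lasso_cv` is a hypothetical helper.

```python
from sklearn.linear_model import LassoCV
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler


def fit_lasso_cv(X_train, y_train, cv=5):
    """Standardize, pick alpha by k-fold CV, and return (chosen alpha, coefficients)."""
    # Standardizing puts all features on one scale, so the L1 penalty treats them equally
    model = make_pipeline(StandardScaler(), LassoCV(cv=cv))
    model.fit(X_train, y_train)
    lasso = model[-1]  # last pipeline step: the fitted LassoCV
    return float(lasso.alpha_), lasso.coef_


# Usage:
# alpha, coefs = fit_lasso_cv(X_train, y_train)
```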

Find Lasso Coefficients

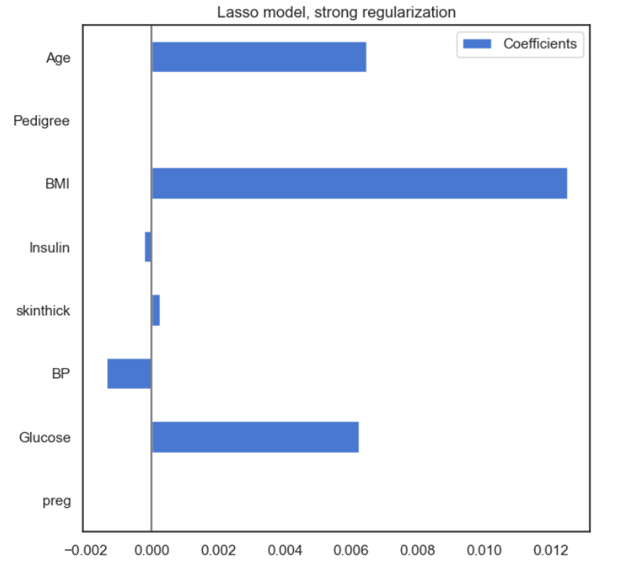

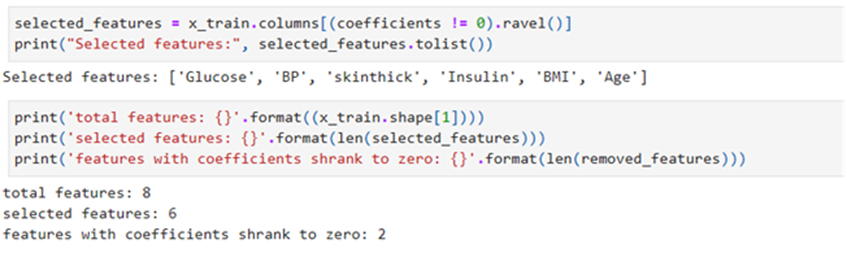

Features whose coefficient is 0 are removed from the dataset. Here the removed features are preg and pedigree, so the selected features are the remaining six.
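To split the features into kept and removed, check which coefficients are exactly zero. The helper name and the coefficient values in the usage line are made up for illustration. They are not the article's results.

```python
import numpy as np


def split_by_coefficient(feature_names, coefs, tol=0.0):
    """Return (selected, removed): features with |coef| > tol vs. the rest."""
    coefs = np.asarray(coefs, dtype=float)
    keep = np.abs(coefs) > tol  # tol=0.0 means "exactly zero is removed"
    selected = [n for n, k in zip(feature_names, keep) if k]
    removed = [n for n, k in zip(feature_names, keep) if not k]
    return selected, removed


# Illustrative (made-up) coefficients:
names = ["Pregnancies", "Glucose", "BMI", "DiabetesPedigreeFunction"]
selected, removed = split_by_coefficient(names, [0.0, 0.12, 0.05, 0.0])
# selected → ['Glucose', 'BMI'], removed → ['Pregnancies', 'DiabetesPedigreeFunction']
```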

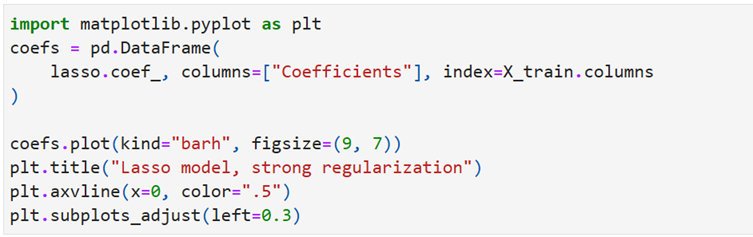

Coefficient Horizontal Bar Chart
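Here is a sketch of the coefficient bar chart in matplotlib. It assumes the coefficients are held in a pandas Series indexed by feature name. Features with a coefficient of 0 appear with no visible bar. The `Agg` backend line is needed only when running outside a notebook, and you can drop it in Jupyter.

```python
import matplotlib
matplotlib.use("Agg")  # non-interactive backend; remove in Jupyter
import matplotlib.pyplot as plt
import pandas as pd


def plot_coefficients(coefs: pd.Series, title="Lasso coefficients"):
    """Horizontal bar chart of coefficients, sorted from most negative to most positive."""
    ordered = coefs.sort_values()
    fig, ax = plt.subplots(figsize=(6, 4))
    ax.barh(ordered.index, ordered.values)
    ax.axvline(0, color="black", linewidth=0.8)  # reference line at zero
    ax.set_xlabel("Coefficient")
    ax.set_title(title)
    fig.tight_layout()
    return fig, ax


# Usage:
# fig, ax = plot_coefficients(pd.Series(coefs, index=X.columns))
# fig.savefig("lasso_coefficients.png")
```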

Selection of features using Lasso – Logistic Regression

Removed Features

Show Selected Features and Print the Report

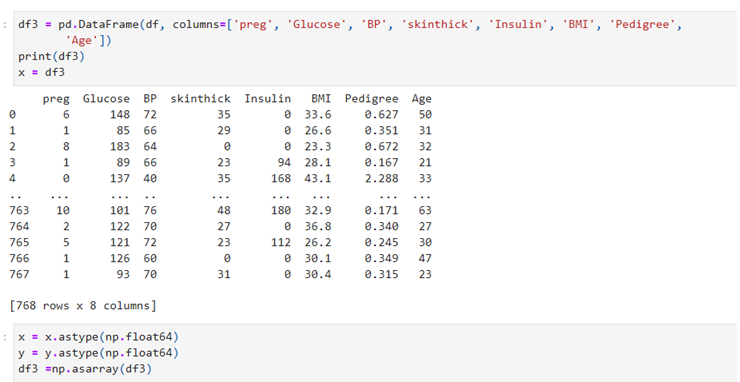
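One way to do Lasso-style selection with logistic regression is to wrap an L1-penalized `LogisticRegression` in `SelectFromModel`. For an L1-penalized estimator, `SelectFromModel` keeps features whose absolute coefficient is at least `1e-5`. Whether the screenshots use exactly this approach is an assumption, and so is `C=0.1`. A smaller `C` means a stronger penalty and usually fewer features. `l1_logistic_selection` is a hypothetical helper.

```python
from sklearn.feature_selection import SelectFromModel
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import classification_report
from sklearn.preprocessing import StandardScaler


def l1_logistic_selection(X_train, X_test, y_train, y_test, feature_names, C=0.1):
    """Select features with L1 logistic regression, refit on them, and report test metrics."""
    scaler = StandardScaler().fit(X_train)  # fit the scaler on training data only
    Xtr, Xte = scaler.transform(X_train), scaler.transform(X_test)
    # liblinear supports penalty="l1"
    selector = SelectFromModel(
        LogisticRegression(penalty="l1", solver="liblinear", C=C)
    ).fit(Xtr, y_train)
    mask = selector.get_support()  # boolean mask of kept columns
    selected = [n for n, m in zip(feature_names, mask) if m]
    removed = [n for n, m in zip(feature_names, mask) if not m]
    # Refit on the kept columns only and evaluate on the test split
    model = LogisticRegression(penalty="l1", solver="liblinear", C=C)
    model.fit(Xtr[:, mask], y_train)
    report = classification_report(y_test, model.predict(Xte[:, mask]))
    return selected, removed, report


# Usage:
# selected, removed, report = l1_logistic_selection(X_train, X_test, y_train, y_test, X.columns)
# print("Selected:", selected); print("Removed:", removed); print(report)
```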

Show selected features in a data frame

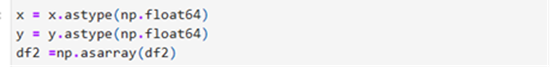

Convert data frame into an array

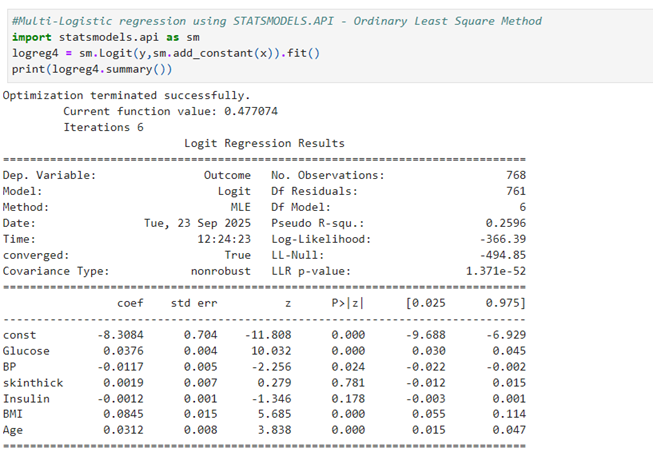

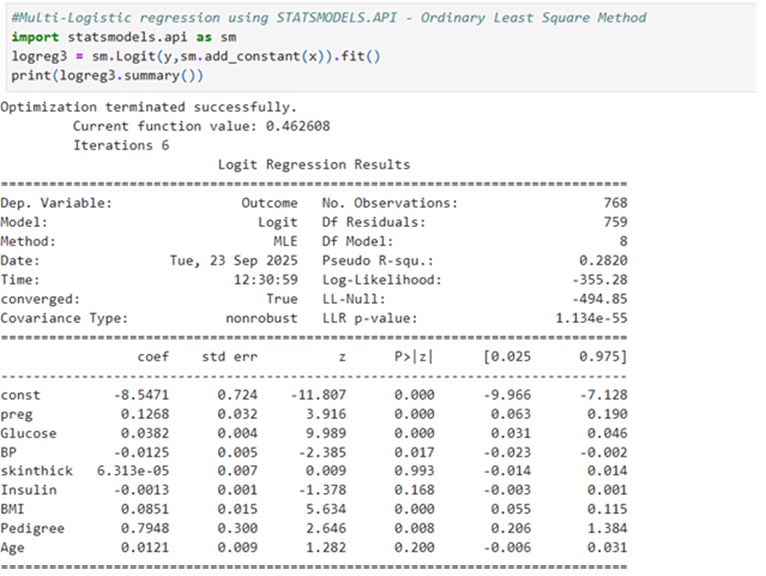
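If you keep the selected columns as a DataFrame, the feature names are preserved, which helps in statsmodels summaries. Calling `.to_numpy()` gives the plain array. `selected_frame_and_array` is a hypothetical helper.

```python
import pandas as pd


def selected_frame_and_array(X: pd.DataFrame, selected):
    """Keep only the selected columns; return both the DataFrame and its NumPy array."""
    X_sel = X.loc[:, list(selected)]
    return X_sel, X_sel.to_numpy()


# Usage:
# X_sel, X_sel_array = selected_frame_and_array(X, selected)
```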

Predict Using the Selected Features (OLS with statsmodels.api)

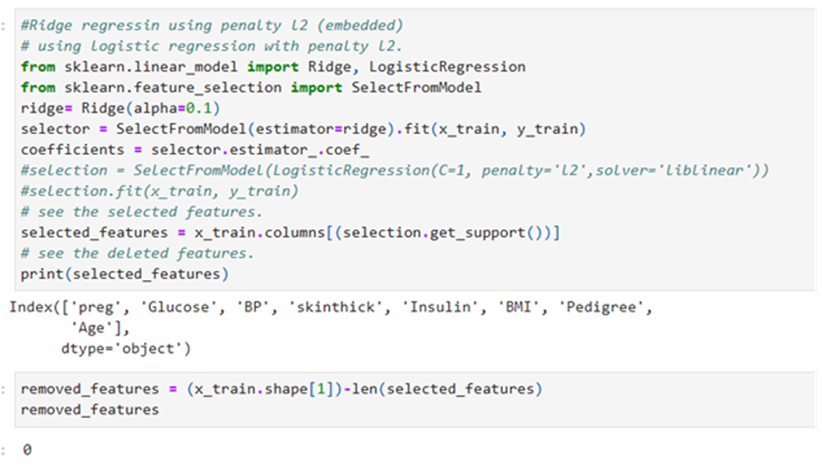

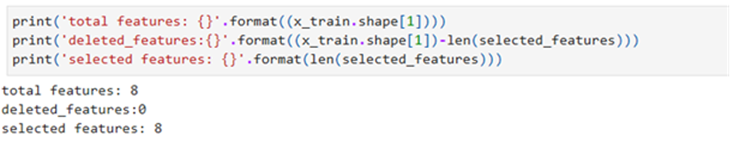

For Comparison: Ridge Regression with an L2 Penalty

Ridge shrinks coefficients toward zero but never sets them exactly to zero, so it does not remove any features. Lasso, by contrast, can shrink coefficients exactly to zero, and the features with zero coefficients can then be removed.
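You can check this difference directly by fitting Lasso and Ridge on the same standardized data and counting the coefficients that are exactly zero. The penalty values (`alpha_lasso=0.1`, `alpha_ridge=1.0`) and the helper name are arbitrary choices for illustration.

```python
import numpy as np
from sklearn.linear_model import Lasso, Ridge
from sklearn.preprocessing import StandardScaler


def count_zero_coefficients(X, y, alpha_lasso=0.1, alpha_ridge=1.0):
    """Return (number of exactly-zero Lasso coefficients, same count for Ridge)."""
    Xs = StandardScaler().fit_transform(X)
    lasso = Lasso(alpha=alpha_lasso).fit(Xs, y)  # L1: soft-thresholds small coefficients to 0
    ridge = Ridge(alpha=alpha_ridge).fit(Xs, y)  # L2: shrinks coefficients but keeps them non-zero
    return int(np.sum(lasso.coef_ == 0)), int(np.sum(ridge.coef_ == 0))


# Usage:
# lasso_zeros, ridge_zeros = count_zero_coefficients(X, y)
```

When the data contain uninformative features, the Lasso count is typically positive while the Ridge count stays at 0.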

Print Report Under Ridge

Convert the selected features into a data frame, then into an array

Logistic Regression using statsmodels.api with selected features

NOTES:

- In Ridge regression, no features are removed because Ridge applies L2 regularization, which penalizes large coefficients but does not shrink them to exactly zero.

- This is in contrast to Lasso regression, which uses L1 regularization and can shrink some coefficients to exactly zero, effectively performing feature selection.

- To remove features when using Ridge (L2) regularization, you need a separate feature-selection method, such as a variance threshold, Recursive Feature Elimination (RFE), or mutual-information-based methods.
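scikit-learn provides all three alternatives named in the notes, and the sketch below runs each one. Several settings are assumptions chosen for illustration: `n_keep=6` (mirroring the six features Lasso kept), the variance threshold of 0, and the L2 logistic regression inside RFE. `alternative_selectors` is a hypothetical helper.

```python
import numpy as np
from sklearn.feature_selection import RFE, VarianceThreshold, mutual_info_classif
from sklearn.linear_model import LogisticRegression
from sklearn.preprocessing import StandardScaler


def alternative_selectors(X, y, feature_names, n_keep=6, var_threshold=0.0, random_state=0):
    """Run three selection methods that can be paired with a Ridge/L2 model."""
    names = np.asarray(feature_names)
    # 1) Keep features whose variance exceeds the threshold (0.0 drops constant columns).
    #    Variance depends on each feature's units, so thresholds above 0 are scale-sensitive.
    vt = VarianceThreshold(threshold=var_threshold).fit(X)
    # 2) RFE refits an L2 (default) logistic regression and drops the weakest feature each round
    Xs = StandardScaler().fit_transform(X)
    rfe = RFE(LogisticRegression(max_iter=1000), n_features_to_select=n_keep).fit(Xs, y)
    # 3) Mutual information between each feature and the class label (higher = more informative)
    mi = mutual_info_classif(X, y, random_state=random_state)
    return {
        "variance": list(names[vt.get_support()]),
        "rfe": list(names[rfe.support_]),
        "mutual_info_top": list(names[np.argsort(mi)[::-1][:n_keep]]),
    }


# Usage:
# print(alternative_selectors(X.to_numpy(), y.to_numpy(), X.columns))
```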