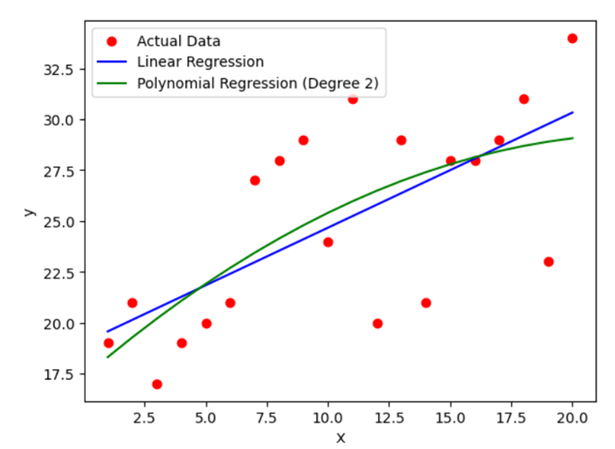

A polynomial encoder in machine learning transforms input features into polynomial features. This lets a model capture non-linear relationships between the features and the target variable, which can improve predictive accuracy in situations where a simple linear model is inadequate.

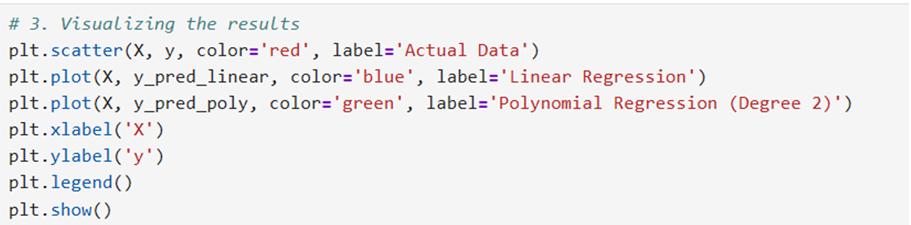

Suppose we have a dataset where the relationship between a feature (e.g., age) and a target variable (e.g., price) is not a straight line but a curve. A plain linear regression model can only fit a straight line, so it struggles to capture such non-linear patterns.

Solution:

- A polynomial encoder creates new features by raising existing features to different powers (e.g., squaring or cubing).

- For example, if you have a feature ‘x’, you might create new features like ‘x^2’, ‘x^3’, ‘x^4’, and so on.

- These new polynomial features are then used in the regression model, allowing it to fit a curved line instead of a straight line.

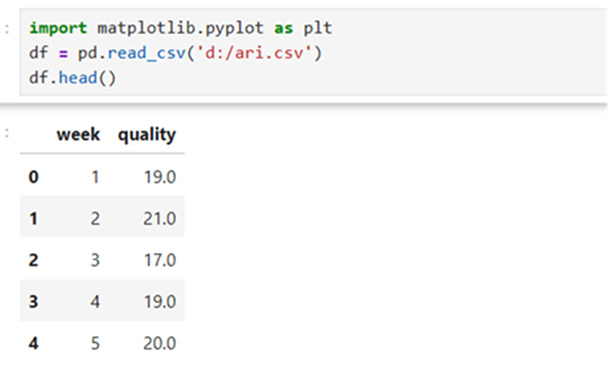

Load libraries and read data:

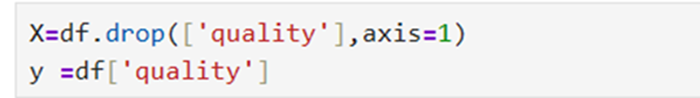
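The article's data file is not named in the text, so this sketch generates a small synthetic stand-in instead: an `age` column and a `price` column with a curved (quadratic) trend plus noise. The column names follow the age/price example above; the coefficients and the `your_data.csv` path in the comment are hypothetical.

```python
import numpy as np
import pandas as pd

# Synthetic stand-in for the article's dataset (assumption, not real data)
rng = np.random.default_rng(seed=0)
age = np.arange(1, 21)
# Quadratic trend plus Gaussian noise, so price vs. age is a curve
price = 50 + 2.0 * age - 0.3 * age**2 + rng.normal(0, 1.0, size=age.size)

df = pd.DataFrame({"age": age, "price": price})

# With a real file this would instead be (hypothetical path):
# df = pd.read_csv("your_data.csv")
print(df.head())
```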

Separate the target variable and the feature variable:

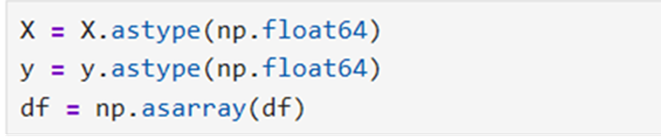
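A sketch of this split in pandas, assuming hypothetical column names `age` (feature) and `price` (target) and a tiny made-up table. The double brackets keep the features two-dimensional, which is the shape scikit-learn estimators expect.

```python
import pandas as pd

# Tiny illustrative table; column names are assumptions
df = pd.DataFrame({"age": [1, 2, 3, 4, 5], "price": [52, 53, 52, 50, 47]})

# Double brackets -> DataFrame of shape (n_samples, 1) for the feature matrix
X = df[["age"]]
# Single brackets -> one-dimensional Series for the target
y = df["price"]

print(X.shape, y.shape)  # (5, 1) (5,)
```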

Convert the variables to float:

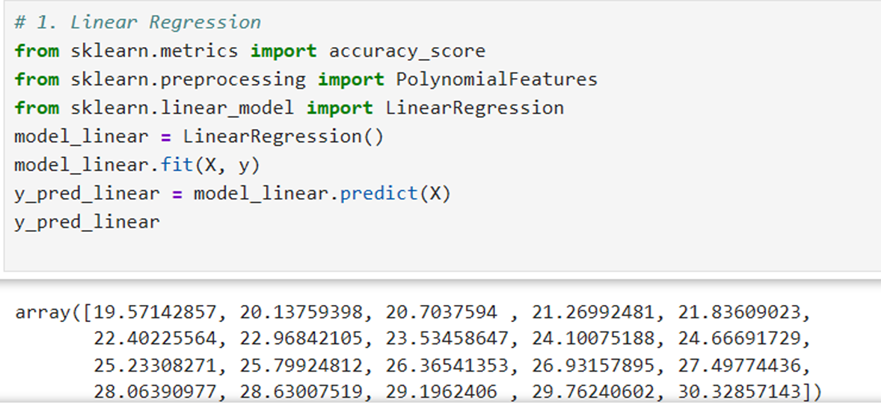
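One way to do the conversion, sketched with `DataFrame.astype`. The string-valued `age` column is a made-up example of values that arrive as text after reading a file; the column names are assumptions.

```python
import pandas as pd

# Illustrative data: 'age' stored as strings, 'price' as integers
df = pd.DataFrame({"age": ["1", "2", "3"], "price": [52, 53, 52]})

# astype(float) parses numeric strings; it raises ValueError on non-numeric text
X = df[["age"]].astype(float)
y = df["price"].astype(float)

print(X.dtypes["age"], y.dtype)  # float64 float64
```

If some entries may be malformed, `pd.to_numeric(..., errors="coerce")` turns them into `NaN` instead of raising, at the cost of having to handle the missing values afterwards.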

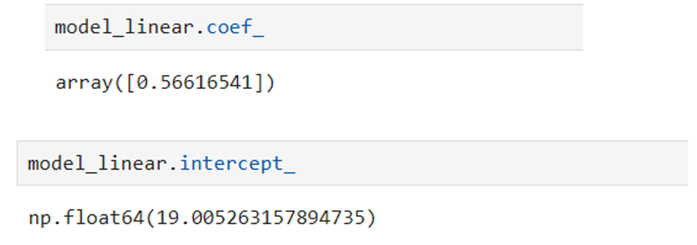

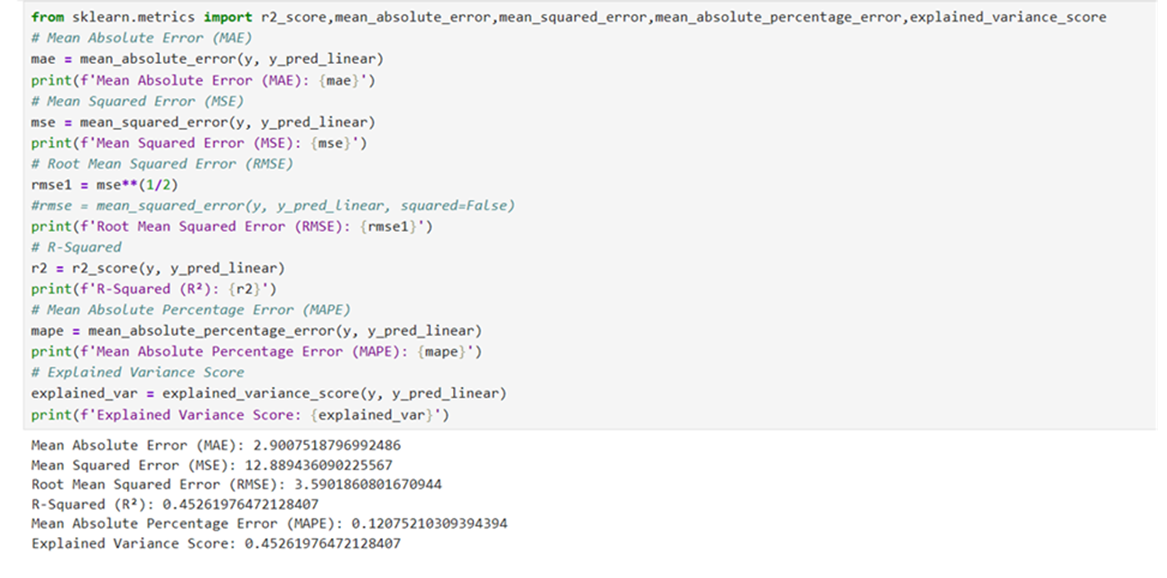

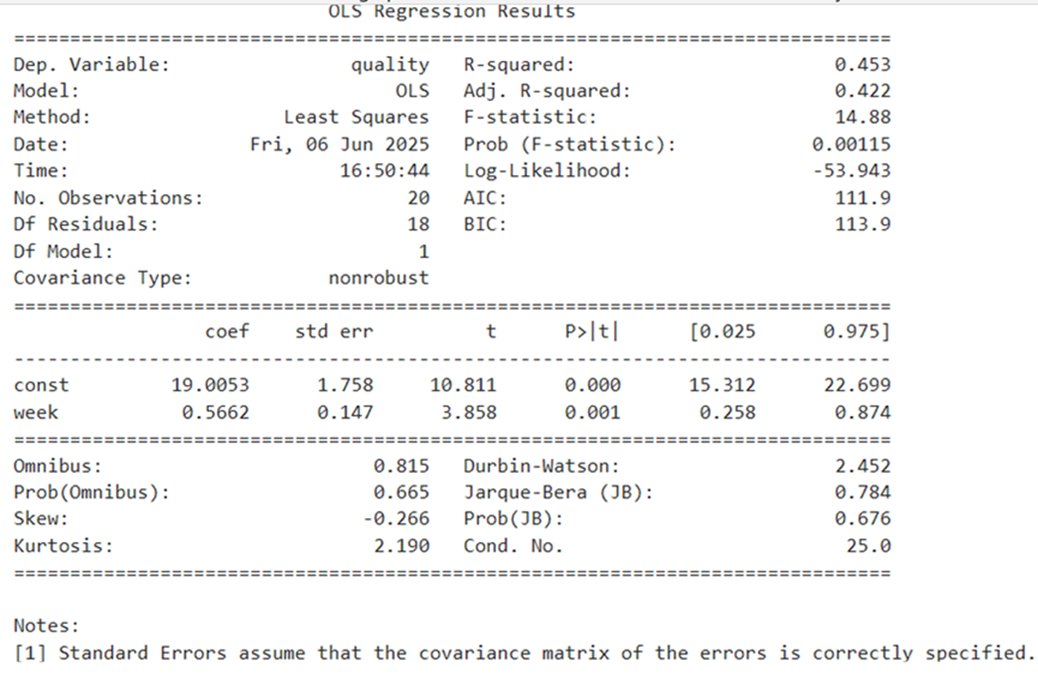

Linear Regression:
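A minimal sketch of why a straight line falls short on curved data, using scikit-learn's `LinearRegression`. The data is a synthetic parabola, `y = 1 + x^2` on `x = -5 … 5`, chosen for illustration rather than taken from the article. Because `x` and `x^2` are uncorrelated on a symmetric range, the best straight line is flat and explains essentially none of the variance.

```python
import numpy as np
from sklearn.linear_model import LinearRegression

# Synthetic curved data (assumption): y = 1 + x^2, no noise
X = np.arange(-5, 6, dtype=float).reshape(-1, 1)
y = 1 + X.ravel() ** 2

lin = LinearRegression()
lin.fit(X, y)

# Slope is ~0 and the intercept is the mean of y (~11),
# so R^2 on the training data is ~0: the line ignores the curve
print(lin.coef_, lin.intercept_)
print(lin.score(X, y))
```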

Linear and polynomial regression using scikit-learn
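A sketch of polynomial regression in scikit-learn, assuming the usual pattern of chaining `PolynomialFeatures` and `LinearRegression` in a pipeline. It uses a synthetic parabola `y = 1 + x^2`, which is illustrative and not the article's data. The model is still an ordinary linear regression; it is simply fitted on the expanded columns `[x, x^2]`, so it can recover the curve.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import PolynomialFeatures

# Synthetic curved data (assumption): y = 1 + x^2
X = np.arange(-5, 6, dtype=float).reshape(-1, 1)
y = 1 + X.ravel() ** 2

# Step 1 expands x into [x, x^2]; step 2 fits a linear model on those columns
poly_model = make_pipeline(
    PolynomialFeatures(degree=2, include_bias=False),
    LinearRegression(),
)
poly_model.fit(X, y)

# make_pipeline names each step after its lowercased class name
lin_reg = poly_model.named_steps["linearregression"]
print(lin_reg.coef_, lin_reg.intercept_)  # approximately [0. 1.] and 1.0
print(poly_model.score(X, y))             # approximately 1.0
print(poly_model.predict([[6.0]]))        # approximately [37.]
```

Wrapping both steps in one pipeline means the same expansion is applied automatically at prediction time, so new inputs never need to be expanded by hand.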