Use the same dataset as before, dia.csv (diabetic.csv)

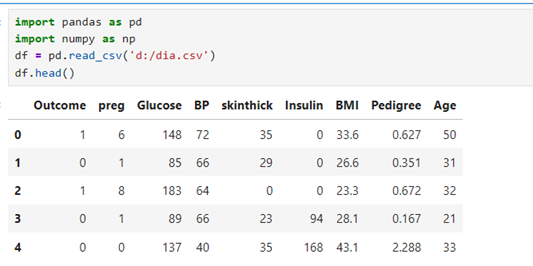

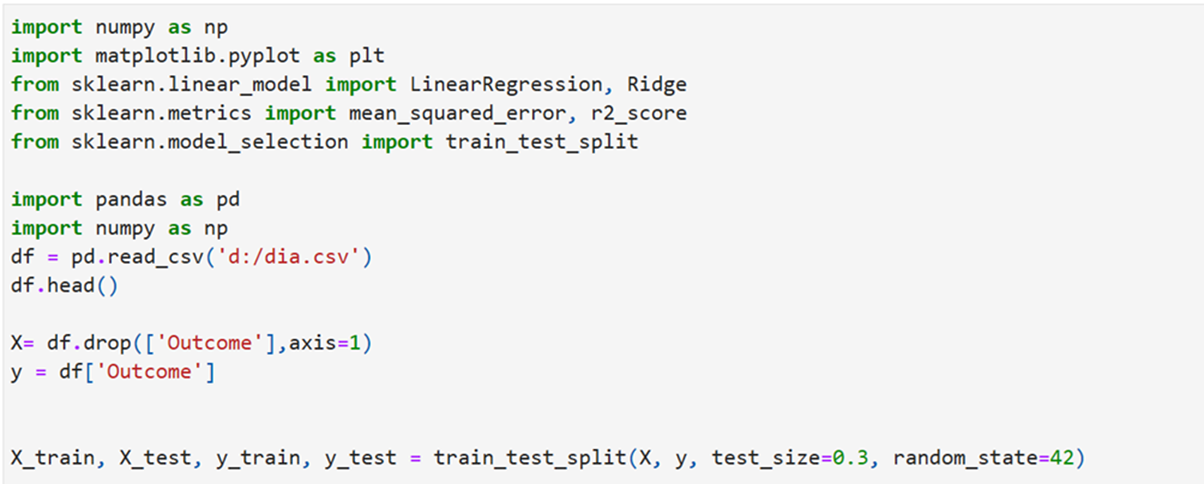

Load Libraries and read data

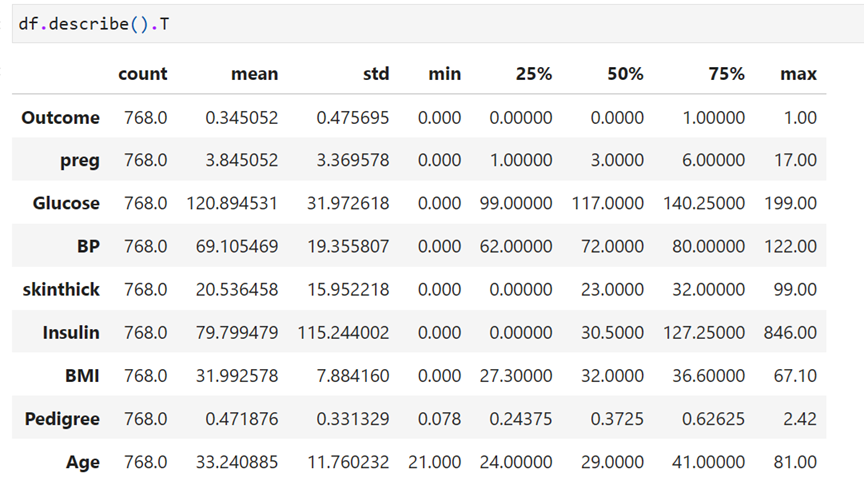

Descriptive Statistics

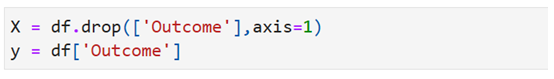

Separate the features (X) from the dependent variable (Outcome)

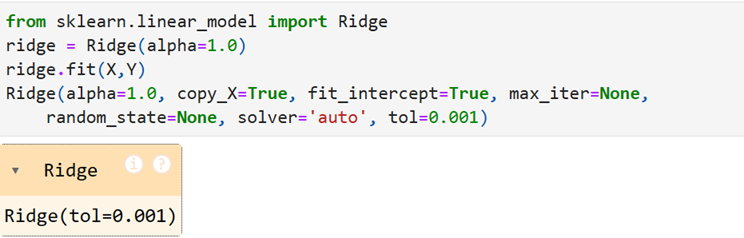
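The loading, describing and splitting steps above can be sketched as follows. This is a minimal illustration, not the code from the screenshots: the helper name `split_features_target` is hypothetical, and the three-row inline CSV with its column names is an assumed stand-in for dia.csv so that the snippet runs on its own.

```python
import io

import pandas as pd


def split_features_target(df, target="Outcome"):
    """Return (X, y): every column except `target`, and the target column."""
    X = df.drop(columns=[target])
    y = df[target]
    return X, y


# Tiny stand-in for dia.csv; the rows and column names are assumptions.
sample_csv = io.StringIO(
    "Glucose,BMI,Age,Outcome\n"
    "148,33.6,50,1\n"
    "85,26.6,31,0\n"
    "183,23.3,32,1\n"
)
df = pd.read_csv(sample_csv)

# Descriptive statistics: count, mean, std, min, quartiles, max per column.
print(df.describe())

X, y = split_features_target(df)
print(X.shape, y.shape)
```

With the real file, `pd.read_csv("dia.csv")` would replace the inline sample, assuming the file sits in the working directory.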

Ridge Algorithm

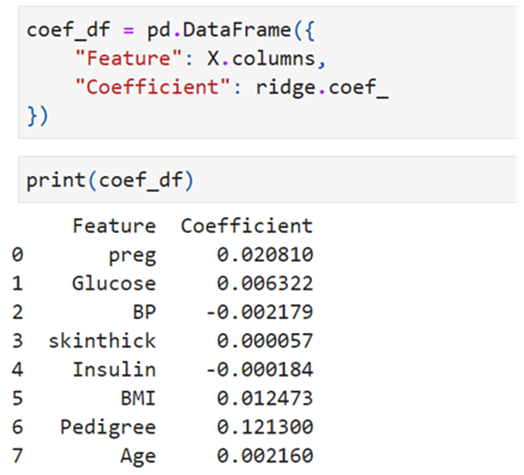

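Find the coefficients. Values are rounded to three decimals, so 0.0 means the coefficient rounds to zero, not that Ridge has removed the feature:

yhat(outcome) = 0.021 * preg + 0.006 * Glucose - 0.002 * BP + 0.0 * skinthick - 0.0 * Insulin + 0.012 * BMI + 0.121 * Pedigree + 0.002 * Age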

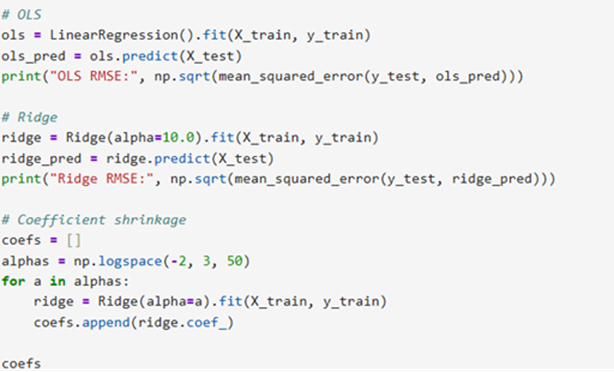
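One way to fit a Ridge model and turn its coefficients into an equation like the one above is sketched here. The data is synthetic (an assumption, so the snippet is self-contained), the three feature names are an illustrative subset, and `alpha=1.0` is an assumed value rather than the one used in the screenshots.

```python
import numpy as np
from sklearn.linear_model import Ridge

# Synthetic stand-in data: the target depends on the first two features only.
rng = np.random.default_rng(0)
names = ["preg", "Glucose", "BMI"]  # illustrative subset of the columns
X = rng.normal(size=(200, 3))
y = 0.5 * X[:, 0] + 0.2 * X[:, 1] + rng.normal(scale=0.1, size=200)

# alpha controls the strength of the L2 penalty (assumed value).
model = Ridge(alpha=1.0).fit(X, y)

# Build a readable equation from the intercept and coef_.
terms = " ".join(f"{c:+.3f} * {n}" for c, n in zip(model.coef_, names))
equation = f"yhat = {model.intercept_:.3f} {terms}"
print(equation)
```

The fitted values live in `model.coef_` (one per feature, in column order) and `model.intercept_`.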

Method 2:

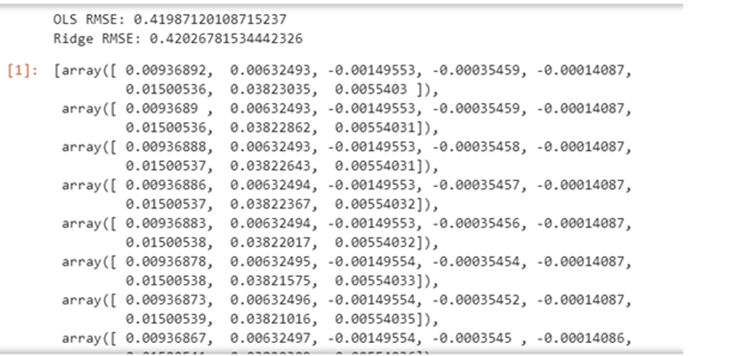

The root mean square error (RMSE) improves (decreases) from OLS to Ridge

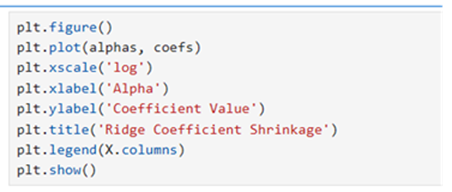
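A comparison of this kind could be computed along the following lines. The sketch uses synthetic data and an assumed `alpha=1.0`, and the helper `rmse` is hypothetical, so the numbers it prints will not match the ones reported for dia.csv.

```python
import numpy as np
from sklearn.linear_model import LinearRegression, Ridge
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import train_test_split


def rmse(model, X_train, X_test, y_train, y_test):
    """Fit `model` on the training split and return RMSE on the test split."""
    model.fit(X_train, y_train)
    return float(np.sqrt(mean_squared_error(y_test, model.predict(X_test))))


# Synthetic stand-in data with 8 features (assumption).
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 8))
y = X @ rng.normal(size=8) + rng.normal(scale=1.0, size=200)

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0
)

rmse_ols = rmse(LinearRegression(), X_train, X_test, y_train, y_test)
rmse_ridge = rmse(Ridge(alpha=1.0), X_train, X_test, y_train, y_test)
print(f"OLS RMSE:   {rmse_ols:.4f}")
print(f"Ridge RMSE: {rmse_ridge:.4f}")
```

Whether Ridge beats OLS on held-out data depends on the dataset and on alpha, so the two values should be compared on the same split.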

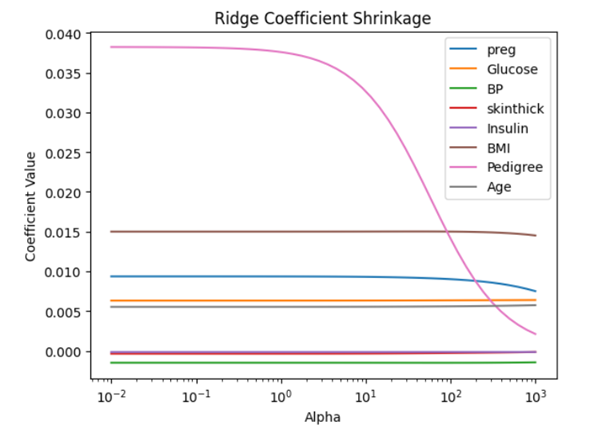

Plot the Ridge coefficients against the alpha values
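A coefficient path of this kind can be produced roughly as below. The data is synthetic and the alpha grid is an assumption. The hypothetical helper `plot_paths` imports matplotlib only when called, so the coefficient computation runs without a plotting backend.

```python
import numpy as np
from sklearn.linear_model import Ridge

# Synthetic stand-in data (assumption).
rng = np.random.default_rng(0)
X = rng.normal(size=(100, 4))
y = X @ np.array([1.0, -0.5, 0.25, 0.0]) + rng.normal(scale=0.1, size=100)

# Fit one Ridge model per alpha and collect the coefficient vectors.
alphas = np.logspace(-2, 4, 20)
coefs = np.array([Ridge(alpha=a).fit(X, y).coef_ for a in alphas])


def plot_paths(alphas, coefs):
    """Draw one line per feature: coefficient value versus alpha (log scale)."""
    import matplotlib.pyplot as plt

    fig, ax = plt.subplots()
    ax.plot(alphas, coefs)
    ax.set_xscale("log")
    ax.set_xlabel("alpha")
    ax.set_ylabel("coefficient")
    return fig
```

Calling `plot_paths(alphas, coefs)` shows each coefficient shrinking toward zero as alpha grows, which is the expected effect of the L2 penalty.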

FEATURE SELECTION UNDER L2 REGULARISATION

Recursive Feature Elimination Technique for L2 regularization

- RFE is a popular feature selection technique in machine learning. It works by repeatedly fitting a model and removing the least relevant features, ranked by the model's coefficients or feature importances.

- Ultimately selects the most informative subset of features.

- RFE can be applied to various models, such as linear models, support vector machines and decision trees.

- Can improve model accuracy by removing noisy or redundant features.

- Starts with all predictors, computes an importance score for each one, and systematically eliminates the least important features.
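The elimination loop described in the points above is available in scikit-learn as `RFE`. The sketch below pairs it with a Ridge estimator. The data is synthetic, the feature names `f0` to `f4` are placeholders, and `n_features_to_select=2` is an assumed setting.

```python
import numpy as np
from sklearn.feature_selection import RFE
from sklearn.linear_model import Ridge

# Synthetic stand-in data: the target depends only on f0 and f3 (assumption).
rng = np.random.default_rng(0)
names = np.array(["f0", "f1", "f2", "f3", "f4"])
X = rng.normal(size=(300, 5))
y = 3.0 * X[:, 0] + 2.0 * X[:, 3] + rng.normal(scale=0.1, size=300)

# step=1 drops one feature per iteration, ranked by the Ridge coefficients.
selector = RFE(estimator=Ridge(alpha=1.0), n_features_to_select=2, step=1)
selector.fit(X, y)

selected = names[selector.support_].tolist()
print("Selected:", selected)
print("Ranking (1 = kept):", selector.ranking_)
```

`support_` is a boolean mask over the columns, and `ranking_` records the order in which features were eliminated.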

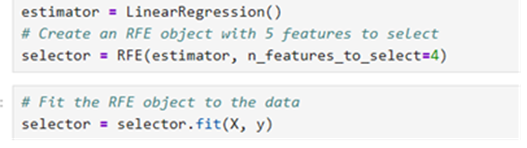

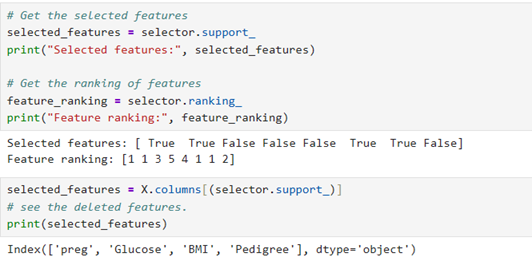

RFE

Selected features using RFE

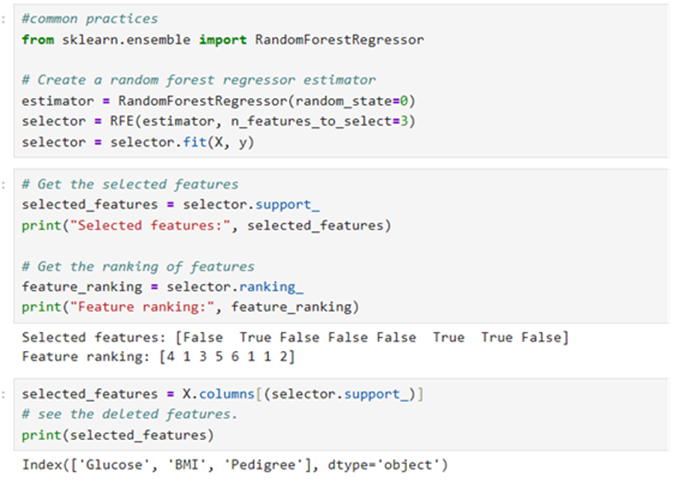

RFE USING RANDOM FOREST REGRESSOR ESTIMATOR

We set n_features_to_select to 3, so RFE selects exactly three features.

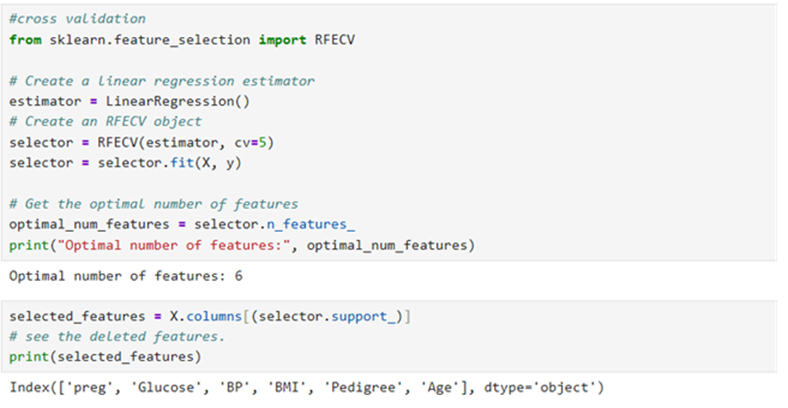
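A sketch of the same idea with a random forest as the estimator follows. The data is synthetic, and `n_estimators=50` and `random_state=0` are assumed settings chosen to keep the run short and repeatable. Only `n_features_to_select=3` comes from the text.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.feature_selection import RFE

# Synthetic stand-in data with 6 features (assumption).
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 6))
y = 3.0 * X[:, 0] + 2.0 * X[:, 2] - 2.0 * X[:, 4] + rng.normal(scale=0.1, size=200)

# With a tree ensemble, RFE ranks features by feature_importances_.
forest = RandomForestRegressor(n_estimators=50, random_state=0)
selector = RFE(estimator=forest, n_features_to_select=3)
selector.fit(X, y)

print("Selected column indices:", np.flatnonzero(selector.support_))
```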

RFE USING RFECV (Cross Validation) Technique

RFECV uses cross-validation to determine the optimal number of features. Here it works out to 6, so six features are selected.

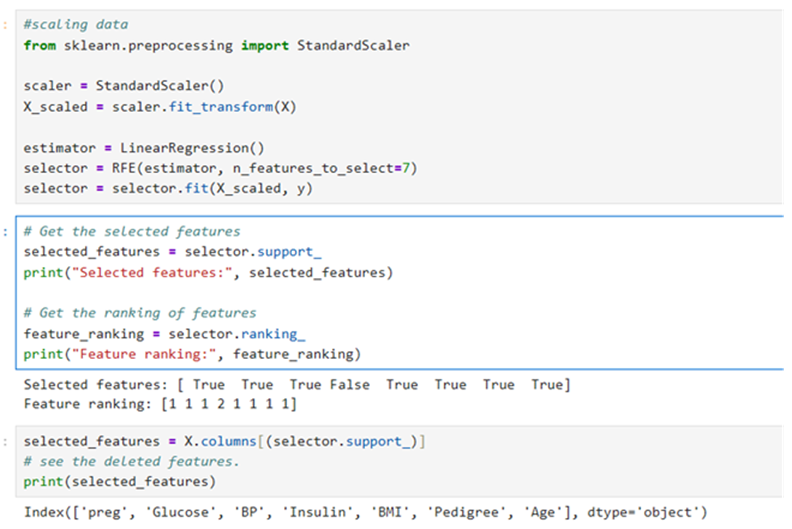
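With `RFECV` the number of features is not fixed in advance. A minimal sketch is given below, on synthetic data and with an assumed Ridge estimator, 5-fold cross-validation and negative MSE scoring. The count it finds will differ from the 6 reported for dia.csv.

```python
import numpy as np
from sklearn.feature_selection import RFECV
from sklearn.linear_model import Ridge

# Synthetic stand-in data: the target depends only on columns 0 and 3 (assumption).
rng = np.random.default_rng(0)
X = rng.normal(size=(300, 8))
y = 3.0 * X[:, 0] + 2.0 * X[:, 3] + rng.normal(scale=0.5, size=300)

# Cross-validation scores each candidate subset size; the best one is kept.
selector = RFECV(
    estimator=Ridge(alpha=1.0),
    step=1,
    cv=5,
    scoring="neg_mean_squared_error",
    min_features_to_select=1,
)
selector.fit(X, y)

print("Optimal number of features:", selector.n_features_)
print("Selected column indices:", np.flatnonzero(selector.support_))
```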

Feature selection on data scaled with StandardScaler

Here we instruct the system to select 7 features.
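Scaling matters for coefficient-based ranking, because unscaled coefficients reflect the units of each column. One way to combine the two steps is a pipeline, sketched here on synthetic data with deliberately mismatched column scales. The Ridge estimator and `alpha=1.0` are assumptions. Only the count of 7 comes from the text.

```python
import numpy as np
from sklearn.feature_selection import RFE
from sklearn.linear_model import Ridge
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in data: 8 columns on very different scales (assumption).
rng = np.random.default_rng(0)
scales = np.array([1.0, 100.0, 50.0, 20.0, 80.0, 30.0, 0.5, 40.0])
X = rng.normal(size=(300, 8)) * scales
weights = np.array([0.5, 0.02, 0.0, 0.01, 0.005, 0.1, 1.0, 0.03])
y = X @ weights + rng.normal(scale=0.5, size=300)

# Standardize first, then let RFE rank features on comparable coefficients.
pipe = Pipeline(
    [
        ("scale", StandardScaler()),
        ("rfe", RFE(estimator=Ridge(alpha=1.0), n_features_to_select=7)),
    ]
)
pipe.fit(X, y)

support = pipe.named_steps["rfe"].support_
print("Kept column indices:", np.flatnonzero(support))
```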